The method employed in this project was developed collaboratively by my company's global UX lead and me, leveraging the principles of desirability that we typically use in exploratory research to evaluate products that have been unsuccessful after launch. The method includes product evaluation against industry pains and needs, competitive analysis, and stress testing to scrutinize product value. Crucially, the method also gauges whether the product is targeting the right market audience, giving the client a directional sense of their product’s market fit in 5 weeks.

In this project, the method was successfully applied by a small team of three, including myself, to evaluate the effectiveness of a client’s product. This project not only marked the adoption of a new evaluation method but also introduced a novel incubation structure for sales for my company.

It was extremely exciting to try new applications of the method, and overall I really enjoyed collaborating on a project that challenged all of our problem-solving skills. During this project, I was also working as principal CX, overseeing our US East team. I was able to bring on someone from the team I oversee to support my global lead and me in executing this method, so a big part of this project was also mentoring and teaching.

ITERATE

Developing & implementing a new method that uses both quantitative and qualitative analysis to evaluate established products that have been unsuccessful since launch.

HIT ME WITH THE HEADLINE:

My role:

Co-lead, Researcher, Mentor

UNDERSTANDING THE CHALLENGE

Our client came to us with a product that they had launched as a paid add-on within Salesforce: a one-stop-shop solution for construction-related project management. It had been created out of necessity, driven by the client's desire to consolidate their project management needs into a single platform, eliminating the hassle of dealing with multiple tools. After being unable to find a product that solved their pains, they built it.

They were led by an extremely dedicated and passionate leadership team who believed they were addressing genuine industry pain points. They had deep industry expertise, and they had placed their software in a popular marketplace for project management solutions. Despite these factors, the crucial question remained: why wasn't their product selling?

The Project Plan

The plan was as follows: a 5-week sprint that would evaluate the product and provide a directional sense of why it was struggling to gain traction. Our global UX lead and I created a new method that would explore desirability along with product measures. The method would take into account pain evaluation, competitor comparison, prototype review, and segmentation exploration. We scheduled check-ins and a final readout to discuss insights and next steps with the client.

THE KICKOFF

We conducted a 2-day in-person kickoff with the clients to gain insight into their perspective, thoroughly review the product, and gather essential information to guide our research.

As this was a new approach, we needed to develop an entirely new kickoff process and create materials that melded the goals of product and desirability.

The kickoff focused on addressing what we refer to as the current 'assumptions,' which included identifying key stakeholders in the industry ecosystem, understanding industry pain points, uncovering pain points of their assumed users, and highlighting any unique aspects of their product.

In order to structure the kickoff effectively, I asked: 'What information does my team need to immediately begin our research tomorrow, in a way that ensures we deliver on our promised outcomes and achieve project success?' Using the outcomes as the priority learning objectives, I developed a detailed workflow using Miro.

I created new content tailored to this workflow, with Miro and in-person activities to guide clients towards our desired objectives. I kept our users in mind, avoiding presenting them with a blank canvas and instead breaking down each question that would lead us to valuable insights.

THE RESEARCH

The interview was separated into sections: background (for segmentation analysis), pains and their prioritization, current solution use, prototype review of the client’s solution and a competitor solution, comparison/forced choice, and implementation. Here are some key components from each section:

Pains & prioritization

When evaluating pains, we began with a general question to understand their role-related frustrations. Although this seems like a broad question for product work, it served multiple purposes: to further qualify the participants' responsibilities to make sure we’re on target, to understand whether or not the scope of the pains our solution covers is top of mind, and to uncover alternative pain points that may be worth addressing. Following this, we quantitatively prioritized pains using a money allocation method that we call 'the $100 exercise'.
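As a rough illustration of how a money allocation exercise like this can be turned into a ranking, here is a minimal Python sketch. It assumes each participant splits exactly $100 across the pains they named and that the pains are ranked by average dollars allocated. The function name `prioritize_pains`, the pain labels, and the averaging rule are all hypothetical and are not taken from the project itself.

```python
from collections import defaultdict


def prioritize_pains(allocations, budget=100):
    """Rank pains by the average dollars participants allocated to them.

    `allocations` is a list of dicts, one per participant, mapping a pain
    label to the dollars that participant assigned to it. A pain that a
    participant did not mention counts as $0 for that participant.
    """
    if not allocations:
        return []

    totals = defaultdict(float)
    for participant in allocations:
        spent = sum(participant.values())
        if spent != budget:
            # Assumption: every participant must spend the full budget.
            raise ValueError(f"Expected ${budget} allocated, got ${spent}")
        for pain, dollars in participant.items():
            totals[pain] += dollars

    n = len(allocations)
    averages = [(pain, total / n) for pain, total in totals.items()]
    # Highest average allocation first.
    return sorted(averages, key=lambda item: item[1], reverse=True)


# Hypothetical example data: two participants, made-up pain labels.
example = [
    {"scheduling": 60, "budget tracking": 40},
    {"scheduling": 50, "budget tracking": 20, "document control": 30},
]
ranking = prioritize_pains(example)
```

Averaging over all participants, rather than only those who mentioned a pain, keeps rarely mentioned pains from ranking artificially high. That is one possible design choice among several.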

Current solutions

After learning about pains, we sought to understand how the participants currently mitigate those problems. Although this section is titled 'Current solutions', our focus was not on digital solutions or delving into specific features they might want us to develop. Instead, we applied desirability principles to understand the ‘why’ behind how they currently solve their problems, whether they involved digital tools, spreadsheets, or other modules. We included this section for multiple reasons: to further validate the severity of the pain points they identified, to establish a baseline understanding of the '5 whys' for their company's decision-making process, and to identify any gaps in their current solves.

Prototype review

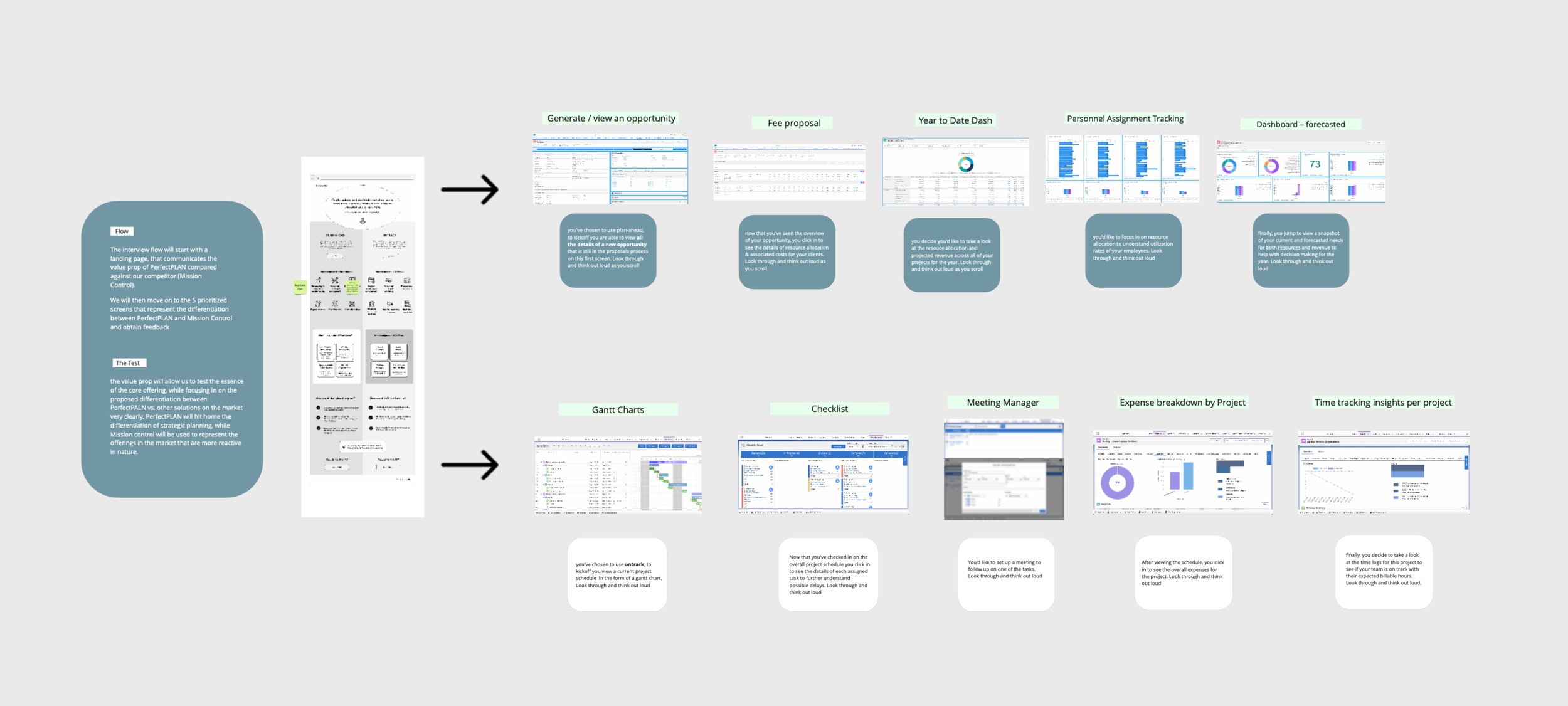

The prototype review also served as an input to our competitor review. We chose screens that best represented the differentiation of the client’s product to share with the participants. We also showed them screens that represented a competitor's differentiation. We followed up with questions to get feedback on each screen, thoughts on improvements, and qualitative questions. I will dig into more of the details of the prototype design below.

Comparison & forced choice

In this section, participants were asked to choose which of the two options they believed would provide the most value and best suit their needs. We then followed up by asking them to reconsider this choice with their current solution included in the mix, leveraging the insights gathered from the 'current solutions' section to delve deeper and understand how our client's offering compares to other alternatives. We dug into perceived differentiation and value in this section.

Implementation

This last set of questions assessed decision making as it pertains to introducing new solutions in their company, stakeholder involvement, blockers of implementation, and purchasing.

After our kickoff, my team and I immediately began crafting scripts and developing prototypes. I led the script development process, conceptualizing the structure of the prototype test. For the interviews, we aimed to incorporate a competitor review. Given that the product was already established, we wanted to test it under conditions that mimicked how potential consumers would encounter it: not in isolation, but alongside other products in a lineup.

The 'user' for this project didn't neatly fit into predefined role categories. Instead, I encouraged the clients to consider how they would classify their target user based on job responsibilities. This approach helped us identify a set of characteristics that defined our potential target user and served as a foundation for validation and further segmentation.

Interviews

Prototype Design

As mentioned above, we designed a set of screens to represent the client’s solution and a competitor’s solution. To avoid bias, we presented them under the guise of a new offering within a marketplace that helps consumers find products that best fit their needs. We offered up our client’s solution and a competitor solution as the ‘choices’ to support the user. Creating a narrative flow for the participant, we allowed them to walk through both solution options and share their feedback.

In this section of the interview, we allowed the participants to own much of the dialogue and control to mitigate as much bias as possible. After getting their uninterrupted feedback, we followed up with both quantitative and qualitative questions.

At this stage we introduced a new quantitative measure that I had been co-developing for some time. The measure, which we call ‘desirability levels’, evaluates product interest progressively, starting with a small ask and raising the stakes to see where the user's interest ends. We then quantify this interest and assess it along with the other quantitative and qualitative information to evaluate the product and consumer insights.
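To make the idea of a progressive measure more concrete, here is a minimal Python sketch of one way such a measure could be scored. It is an illustration only: the write-up above does not specify the actual asks or the scoring rule, so the example asks (named in the comments), the "count consecutive yes answers" rule, and the function names `desirability_level` and `level_distribution` are all assumptions.

```python
def desirability_level(responses):
    """Return how many escalating asks a participant accepted in a row.

    `responses` is an ordered list of booleans, smallest ask first.
    Counting stops at the first refusal, so a later 'yes' after a 'no'
    does not raise the level (an assumed rule, not the project's).
    """
    level = 0
    for accepted in responses:
        if not accepted:
            break
        level += 1
    return level


def level_distribution(all_responses, n_levels):
    """Count how many participants ended at each level, from 0 to n_levels."""
    counts = [0] * (n_levels + 1)
    for responses in all_responses:
        counts[desirability_level(responses)] += 1
    return counts


# Hypothetical asks, smallest to largest:
#   1. join a mailing list, 2. book a demo, 3. start a paid pilot
participants = [
    [True, True, False],   # interest ends before the paid pilot
    [True, False, False],  # interest ends before the demo
    [True, True, True],    # accepts every ask
]
distribution = level_distribution(participants, n_levels=3)
```

A distribution like this could then be read alongside the qualitative feedback, for example to see whether interest tends to drop off at the point where cost or commitment is introduced.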

The flow I created to test the client’s solution against competitors:

THE ANALYSIS

The data for this project included both quantitative and qualitative information. There were multiple sets of quant data that needed to be evaluated in relation to the qual to get a clear picture of the results. Using raw data cuts, I took the lead on bringing to life both sets of data to communicate the insights and outcomes to our client. We shared this back in the form of a readout delivered through a deck.

The results indicated that there was a perceived value add from the client’s solution. However, there are several existing blockers to system implementation, and without addressing those barriers to entry, a new system may not provide significant enough value to outweigh the hurdles of implementation. With this outcome in mind, we discussed with the client whether addressing blockers to implementation was in scope for them if they were to continue on the journey of optimizing their product to meet market needs.

Outcomes

The clients were pleased with the thoroughness of the results and decided to sign on for a next round focused on barriers to entry and product development.